A Tale of Two Airlines

In a recent month, Airline A proudly announced a “98% schedule reliability” for its flights – a figure that would reassure any traveller. Yet, hidden behind this impressive percentage was a catch: the airline had rescheduled over one-third of its flights during that period. Meanwhile, Airline B, operating under a stricter definition of reliability, reported a far lower 62% schedule reliability after accounting for numerous schedule changes and cancellations. These contrasting examples highlight a pressing issue in the aviation industry: the lack of a consistent, integrated approach to calculating and communicating key performance metrics like schedule reliability, on-time performance (OTP), rescheduled flights, and cancellations. This inconsistency not only confuses passengers but also risks eroding airline credibility and the reputation of the industry at large.

In Africa’s rapidly growing aviation sector, where improving passenger trust and operational transparency are vital, establishing common standards for performance metrics is more important than ever. This article examines how differing methodologies for measuring reliability and punctuality can mislead stakeholders, reviews global standards and regulatory frameworks addressing these issues, and advocates for a unified approach – including clearer definitions, greater transparency, and coordinated policy action – particularly for African carriers.

The Patchwork of Punctuality Metrics

What is “On-Time” and “Reliable”? The answer often depends on who you ask. At first glance, metrics like on-time performance and schedule reliability seem straightforward. Industry practice (largely led by organizations like OAG and Cirium) traditionally defines an on-time flight as one that arrives or departs within 15 minutes of its scheduled time. By this measure, a flight that pushes back from the gate 14 minutes late is officially “on time,” whereas 15 minutes or more counts as “late.” Notably, this 15-minute rule, though widely used, “hasn’t been determined by a committee at ICAO or IATA” – it became a de facto standard through common usage rather than formal adoption. The lack of an officially sanctioned global definition leaves room for variation. For example, some airlines and data providers historically excluded cancelled flights from on-time statistics, thereby boosting their reported performance. Inconsistent inclusion (or exclusion) of such factors means one airline’s 90% on-time rate might not equal another’s 90%, if the second airline had to cancel or reschedule more flights in the process.

Schedule reliability – often understood as the percentage of scheduled flights that were actually operated – is another metric with disparate interpretations. Many carriers calculate reliability simply as flights completed versus flights scheduled, effectively a “completion factor” that penalizes outright cancellations but not necessarily major delays or re-timings. By this measure, Airline A’s 98% reliability reflected that almost all flights eventually took off (a high completion rate), even if a large share did so at a different time than originally planned. Other airlines (like Airline B in the earlier example) use a more stringent definition: if a flight didn’t operate exactly as scheduled – whether due to cancellation or a significant rescheduling – it counts against reliability. This approach yields a much lower reliability percentage (62% for Airline B in April) because it treats substantial schedule changes as failures in reliability. The lack of alignment on what “counts” as a reliable, on-schedule flight leads to a patchwork of punctuality metrics, where airlines are effectively grading themselves on different scales.
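To make the gap between the two definitions concrete, the following is a minimal Python sketch, not any airline's actual method. The `Flight` record, the two function names and the monthly figures are all hypothetical, chosen only to echo the headline percentages above. It shows how one month of operations can score very differently depending on whether retimed flights count against reliability.

```python
from dataclasses import dataclass


@dataclass
class Flight:
    operated: bool         # did the flight eventually fly?
    retimed: bool = False  # was it moved to a materially different time?


def completion_factor(flights):
    """Share of scheduled flights that operated at all (retimings ignored)."""
    return sum(f.operated for f in flights) / len(flights)


def strict_reliability(flights):
    """Share of scheduled flights that operated without a significant retiming."""
    return sum(f.operated and not f.retimed for f in flights) / len(flights)


# Hypothetical month: 100 scheduled flights, of which
# 62 flew as planned, 36 flew at a changed time, and 2 were cancelled.
flights = (
    [Flight(operated=True) for _ in range(62)]
    + [Flight(operated=True, retimed=True) for _ in range(36)]
    + [Flight(operated=False) for _ in range(2)]
)

print(f"Completion factor:  {completion_factor(flights):.0%}")   # 98%
print(f"Strict reliability: {strict_reliability(flights):.0%}")  # 62%
```

Both figures are arithmetically correct for the same hypothetical operation. The only difference is the definition, which is why a headline percentage means little without the definition behind it.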

Further complicating matters is the distinction between departure punctuality and arrival punctuality. Some performance reports focus on on-time departures, while others emphasize on-time arrivals (which are ultimately what passengers experience at their destination). There is also variance in the reference point: using gate departure/arrival times versus runway (takeoff/landing) times. Industry best practice favors measuring from the gate, since that reflects the schedule promised to passengers. Using other benchmarks can inflate performance figures. Cirium, a leading aviation data firm, warns that using “runway arrival” time instead of gate arrival “does not conform to industry standards” and “often leads to overstated OTP”. In other words, if an airline measures arrival when wheels touch the runway, it might claim a flight was on time, even if lengthy taxiing meant passengers deplaned very late. Such methodological choices – whether to count a flight as on time at touchdown versus at the gate, or whether a flight retimed to the next morning is a “reschedule” or a “cancellation” – can make an airline appear more punctual or reliable than passengers actually perceive.
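The effect of these choices can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than Cirium's or OAG's actual methodology: the `Arrival` record, the `otp` function, its two switches and the ten sample flights are all hypothetical. The only element taken from the text is the 15-minute convention, with a flight 14 minutes late counted as on time and one 15 minutes late counted as late.

```python
from dataclasses import dataclass
from typing import Optional

ON_TIME_THRESHOLD_MIN = 15  # de facto convention: under 15 minutes late is "on time"


@dataclass
class Arrival:
    runway_delay_min: Optional[float]  # minutes late at touchdown; None if cancelled
    gate_delay_min: Optional[float]    # minutes late at the gate; None if cancelled


def otp(arrivals, use_gate=True, count_cancelled=True):
    """On-time arrival percentage under a chosen reference point and cancellation rule."""
    # Optionally drop cancelled flights from the denominator (the flattering choice).
    pool = [a for a in arrivals if count_cancelled or a.gate_delay_min is not None]
    on_time = 0
    for a in pool:
        delay = a.gate_delay_min if use_gate else a.runway_delay_min
        # A cancelled flight has no delay value and is never on time.
        if delay is not None and delay < ON_TIME_THRESHOLD_MIN:
            on_time += 1
    return 100 * on_time / len(pool)


# Hypothetical sample of 10 scheduled flights.
sample = (
    [Arrival(5, 8)] * 6        # punctual by either measure
    + [Arrival(10, 25)] * 2    # landed on time, long taxi to the gate
    + [Arrival(40, 50)]        # late by either measure
    + [Arrival(None, None)]    # cancelled
)

print(round(otp(sample), 1))                                         # 60.0
print(round(otp(sample, use_gate=False), 1))                         # 80.0
print(round(otp(sample, use_gate=False, count_cancelled=False), 1))  # 88.9
```

The same ten flights produce anything from 60% to roughly 89% on-time performance, depending only on the reference point and on whether the cancelled flight stays in the denominator.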

When Metrics Mislead: Impact on Passengers and Credibility

Inconsistent or opaque performance metrics are not just an academic issue – they have real impacts on passenger expectations and airline credibility. Travellers rely on statistics like “98% schedule reliability” or “85% on-time arrivals” to inform their choices. When those figures are achieved by creative math or differing definitions, passengers can be misled about the level of service to expect. For instance, an airline might advertise a high on-time departure rate, but a passenger may still encounter frequent delays in reality if those statistics ignored the delays that occur after leaving the gate or excluded certain chronically late connecting flights. As aviation analyst Peter Greenberg notes, “it all comes down to how on-time performance is calculated — and the metrics can be intentionally misleading.” He gives the example that a plane pushing back from the gate within 15 minutes counts as an on-time departure, “even if it’s on the runway for the next two hours.” From the passenger’s seat, of course, such a flight is anything but on time. When travelers discover these nuances, trust can quickly evaporate. A customer who felt duped by optimistic punctuality stats is less likely to believe an airline’s other claims and more likely to vent frustration in public forums, hurting the carrier’s reputation.

Airlines themselves also suffer credibility damage industry-wide when performance reporting isn’t consistent. In the earlier example, Airline A’s glowing reliability figure masked significant operational hiccups; informed observers (and certainly competitors) would question how meaningful a 98% reliability metric is if over 200 flights were shuffled around or delayed. This erodes confidence not just in that airline but in airline metrics generally. The industry’s reputation is at stake: if each carrier uses its own yardstick for success, any claims of “best on-time performance” or “leading reliability” become difficult to verify independently. It can also undermine competitive fairness – airlines that are more transparent might look worse on paper than those that game the numbers, creating a perverse incentive to adopt less scrupulous reporting practices.

Moreover, disjointed metrics impede effective policy making and oversight. Regulators and consumer advocates need apples-to-apples data to identify systemic issues and protect travelers. If, say, one airline considers a flight that was moved from 8am to 3pm on the same day as “on schedule” (since it still operated that day), but another airline counts that as a failure against reliability, comparing the two to identify who is truly more dependable becomes challenging. Passengers, too, are left in the dark – they cannot easily compare records when airlines publish only the metrics that flatter their performance. This lack of transparency and comparability ultimately dampens accountability. Without consistent benchmarks, poor performers can hide behind favorable definitions, and excellent performers don’t get the full credit they deserve.

An additional complexity has emerged in recent airline reporting practices: the increasing use of the term “reaccommodated” in place of “rescheduled” or “cancelled.” While the term may appear passenger-friendly, its ambiguity can obscure the true nature of a disruption. For example, when a flight is cancelled and the passenger is moved to a different flight or day, describing the outcome as “reaccommodation” avoids the regulatory implications of calling it a cancellation—especially where compensation or statutory care may apply.

This linguistic shift has significant implications for transparency. Passengers may not realise that their original flight was cancelled or delayed beyond compensation thresholds, and regulators may find it harder to track true operational disruptions. In a landscape already fraught with varied definitions of punctuality and reliability, the adoption of “reaccommodated” adds another layer of obfuscation that risks undermining the industry’s credibility.

To maintain clarity, performance metrics and communications should avoid euphemistic terms unless precisely defined in regulatory frameworks. A rescheduled or cancelled flight should be clearly labelled as such, regardless of whether a passenger was rebooked. Any deviation from the original agreed schedule—voluntary or not—should be transparently reported and counted in performance metrics.

Global Standards and Regulatory Frameworks: Closing the Gaps

The aviation industry is not starting from scratch in tackling performance metric inconsistencies. Internationally, a range of standards, regulations, and benchmarks have emerged to define and align metrics like on-time performance (OTP), cancellation rates, and schedule reliability.

On the industry side, IATA and ICAO have long provided guidance on terminology and operating benchmarks. A flight is generally considered “on time” if it arrives or departs within 15 minutes of the published schedule—a convention widely adopted, though not yet codified into a formal ICAO standard. Cirium and OAG, two of the most respected aviation data aggregators, have reinforced this convention by applying strict, uniform methodologies globally. For example:

Cirium includes cancelled flights as “not on-time” and tracks completion factors (i.e. the percentage of scheduled flights that actually operated), giving a more holistic view of an airline’s reliability. These metrics are published in reports like the Cirium On-Time Performance Review and the OAG Punctuality League, which have become de facto benchmarks for global airline performance.

These reporting models prevent airlines from omitting key disruption data or reframing poor performance using selective definitions. Airlines that deviate from these standards are easily exposed when held up against globally consistent benchmarks.

By contrast, Nigeria’s regulatory framework (NCAA CARs Part 19, updated 2023), while strong in consumer rights and service obligations (e.g. compensation thresholds, delay care standards), does not provide any definition or equation for calculating OTP or schedule reliability. There is no regulatory requirement to measure, report, or validate these figures consistently. This gap has created a vacuum in which airlines report their own performance using undefined, non-comparable methods—often overstating reliability through selective interpretation.

For example, some airlines have publicly claimed 98% schedule reliability while simultaneously rescheduling over 30% of flights, or failing to operate dozens of scheduled services. Others include only completed flights in OTP calculations, excluding delays beyond 15 minutes or cancellations entirely. This kind of selective disclosure, while not explicitly illegal, amounts to cross-industry gaslighting—where performance metrics serve marketing objectives rather than transparency or passenger truth.

This situation stands in sharp contrast to jurisdictions like the United States, where the Department of Transportation (DOT) mandates standardised monthly reporting from airlines. Airlines must calculate OTP using consistent rules and submit data on delays, causes, and cancellations. If they publish performance claims elsewhere (e.g. websites, booking systems), these must match their official DOT filings. This alignment ensures passengers can trust what they see—and that regulators can monitor systemic issues across the industry.

Similarly, in Europe and the UK, the EU261/UK261 frameworks define when a delay or cancellation occurs, what rights passengers have, and what counts as a significant disruption. While these laws don’t dictate how OTP must be calculated, they do prevent airlines from rebranding cancellations as reschedules to escape obligations—because any significant schedule change, if not communicated in time, triggers passenger compensation.

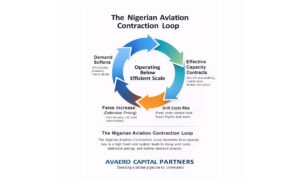

In Nigeria, despite the 2023 update of Part 19, the absence of standardised definitions and equations for OTP and schedule reliability means airlines continue to self-report metrics that flatter their operations without accountability. This damages public trust, misleads stakeholders, and undermines the credibility of airline performance claims across the board. The lack of a coordinated approach to industry improvement, based on consistent metrics, could be widening the performance gap between Nigerian airlines and those in other regions.

To close these gaps, aviation authorities—and regional bodies like AFRAA or AFCAC—should consider:

- Developing and enforcing standard metric definitions, including OTP and reliability calculations aligned with IATA or Cirium/OAG standards

- Mandating public reporting of performance data using agreed methodologies

- Prohibiting misleading use of undefined or marketing-driven terms (such as “reaccommodated”) that distort the nature of service failures

Until such steps are taken, airlines in the region will continue to operate in a fragmented reporting environment where data can be manipulated for optics, rather than used to drive real accountability or improvement.

Toward a Unified Approach in Africa

For African airlines and regulators, adopting a consistent, integrated approach to these metrics is both a challenge and an opportunity. The continent’s aviation industry is diverse – ranging from large international carriers to small domestic operators – and not all have historically tracked performance metrics with the same rigor. However, as more African airlines aspire to world-class service and compete on the global stage, there is a growing recognition that common performance standards are essential. A passenger flying within Africa should have the same clarity about what “on time” means as one flying in Europe or the U.S. African carriers, in particular, stand to gain credibility by proactively standardizing how they measure and communicate reliability. Rather than each airline devising its own scorecard on social media (as is the trend now, with monthly performance graphics on Twitter, Facebook, and LinkedIn), there could be an industry consensus on definitions: for example, all airlines agreeing that schedule reliability will mean “% of flights that operated as originally scheduled (no cancellation or date/time change),” and on-time performance will mean “% of flights departing/arriving within 15 minutes of schedule, measured at the gate.” Cross-industry agreement on these points would remove the current guesswork for the public. Encouragingly, discussions are already bubbling in industry forums – as noted in one aviation commentary, an IATA standard of 85% on-time is often cited as a target, indicating that African airlines are aware of global benchmarks even if they don’t formally follow them yet.
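Written as equations, the two example definitions above might look as follows. This is one possible formalisation, not an adopted standard. The symbols are introduced here for illustration only, and using scheduled flights as the OTP denominator (so that cancellations count as not on time) is an assumption borrowed from the Cirium practice described earlier.

```latex
\[
\text{Schedule reliability} \;=\; \frac{N_{\text{as scheduled}}}{N_{\text{scheduled}}} \times 100\%
\qquad
\text{OTP} \;=\; \frac{N_{\text{on time}}}{N_{\text{scheduled}}} \times 100\%
\]
% N_scheduled    : flights in the originally published schedule for the period
% N_as scheduled : flights operated with no cancellation and no date/time change
% N_on time      : flights departing/arriving at the gate less than 15 minutes
%                  after the scheduled time
```

Under these definitions a retimed flight lowers schedule reliability even if it eventually operates, and a cancelled flight lowers both figures.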

What steps can be taken to move forward? A multi-pronged strategy could help achieve alignment:

- Develop Pan-Industry Definitions: Through bodies like the African Airlines Association (AFRAA) or the African Civil Aviation Commission, stakeholders can convene to agree on standard definitions for on-time performance, schedule reliability, and what constitutes a “rescheduled” flight versus a “cancelled” flight. This could draw on ICAO/IATA guidance and be tailored to African operations. Having a published glossary of performance terms for African aviation would set a baseline.

- Transparent Reporting and Data Sharing: Airlines should be encouraged – if not mandated by regulators – to publish their performance metrics in a transparent manner. Rather than just a single percentage with potential fine print, carriers could disclose the underlying numbers: total scheduled flights, number operated, number delayed beyond 15 minutes, number cancelled. This level of transparency, as seen in the United States reporting system, prevents misunderstandings. Passengers would see, for example, that an airline with “96% schedule reliability” also had perhaps 4% cancellations (since 96% of flights were completed). An industry-wide portal or database could even be established (perhaps under the auspices of a regional aviation authority) where monthly performance data from all African airlines is compiled for easy comparison.

- Regional Regulatory Alignment: African national regulators can incorporate standardized metric reporting into their consumer protection rules. Nigeria’s NCAA has already set a precedent by defining delays and requiring airlines to inform and care for passengers accordingly. Other countries or regional blocs (e.g. the East African Community’s Civil Aviation bodies or the proposed Pan-African aviation tribunal under the Single African Air Transport Market) could adopt similar provisions. If regulators harmonize their requirements – for instance, all insisting that any flight postponement to the next day is reported as a cancellation in performance stats – airlines will have to adjust their reporting to one consistent model. This regulatory push can level the playing field, so no airline has a local advantage by using laxer definitions.

- Engage with Global Initiatives: African carriers should also engage with global programs like IATA’s operational excellence workshops or Cirium’s data partnerships to improve data accuracy. By participating in global on-time performance analyses, African airlines can benchmark themselves and get independent verification of their stats. This external validation can be a confidence booster and reveal areas where definitions might differ. For example, if an African airline finds its internal OTP calculation doesn’t match what OAG or Cirium reports for them, that’s a sign to investigate and align methodology.

- Passenger Communication and Education: While technical definitions are being aligned in the background, airlines can take steps in the interim to communicate more clearly with customers. Instead of just quoting a percentage, airlines can explain what it means (e.g. “On-time performance 73% – this means 73 out of 100 flights arrived within 15 minutes of schedule in the last month”). Educating passengers that a “rescheduled” flight a day before departure is effectively a cancellation of the original flight (from the perspective of their rights) can also build trust. When passengers see that an airline is not trying to sugarcoat issues, they are more likely to remain loyal even through disruptions.
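The transparent-reporting and passenger-communication steps above could be combined in a very small reporting routine. The Python below is a sketch only: the function names, the field names and the monthly counts are hypothetical, and it assumes cancelled flights stay in the OTP denominator. It publishes the underlying counts alongside the percentages and then restates the headline figure in plain language.

```python
def performance_summary(scheduled, operated, delayed_15_plus):
    """Build a disclosure from raw monthly counts (all inputs are flight counts)."""
    if scheduled <= 0 or not (0 <= delayed_15_plus <= operated <= scheduled):
        raise ValueError("counts are inconsistent")
    cancelled = scheduled - operated
    on_time = operated - delayed_15_plus
    return {
        "scheduled": scheduled,
        "operated": operated,
        "cancelled": cancelled,
        "delayed_15_plus": delayed_15_plus,
        # Percentages use scheduled flights as the denominator (an assumption),
        # so cancellations reduce both figures.
        "completion_pct": 100 * operated / scheduled,
        "on_time_pct": 100 * on_time / scheduled,
    }


def plain_language(summary):
    """Restate the headline OTP figure in passenger-friendly terms."""
    otp = round(summary["on_time_pct"])
    return (
        f"On-time performance {otp}%: about {otp} out of every 100 scheduled "
        f"flights arrived within 15 minutes of schedule."
    )


# Hypothetical month: 1,000 scheduled, 960 operated, 230 of those 15+ minutes late.
summary = performance_summary(scheduled=1000, operated=960, delayed_15_plus=230)
for key, value in summary.items():
    print(f"{key}: {value}")
print(plain_language(summary))
```

Publishing the counts with the percentages lets a reader recompute the headline figure under any other definition, which a single unexplained percentage does not allow.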

Charting a Reliable Path Forward

In the aviation business, consistency is credibility. As African aviation continues its growth trajectory, a unified approach to reliability and punctuality metrics will serve as a foundation for improved customer confidence and industry reputation. The current patchwork of definitions – where one airline’s 98% reliability might equate to another’s 85% – is untenable in the long run, especially as African carriers forge deeper partnerships and alliances (within Africa and with global airlines) that will inevitably draw comparisons. By learning from global standards set by organizations like IATA and ICAO and from regulatory frameworks such as EU261 and NCAA’s consumer protection rules, African aviation stakeholders can address the misalignment in performance reporting that has long plagued the industry. The aim is not to burden airlines with onerous reporting, but to ensure that when an airline says “we are on time,” it means the same thing across the board.

A more integrated approach will also help airlines internally: consistent metrics enable more effective identification of operational weak spots and facilitate sharing of best practices. If every carrier measures on-time performance the same way, they can collaborate and compete on improving it, rather than on improving the optics of it. For regulators and industry bodies, aligned metrics make it easier to spot systemic issues – be it air traffic inefficiencies or scheduling practices – and address them through policy or infrastructure investment.

Ultimately, a passenger in Lagos, Nairobi or Johannesburg deserves the same clarity about what to expect from their flight as a passenger in London or New York. Achieving consistency in how schedule reliability and on-time performance are calculated and communicated is a critical step toward that goal. It will require coordination, transparency, and yes, some advocacy to nudge everyone in the same direction. But the payoff is a win-win: passengers get honest, comparable information and a more reliable travel experience, while airlines and the African aviation sector gain credibility and trust. In an industry where time is literally money and reputation, embracing a standardized approach to reliability metrics is an investment in the future – one that will keep African aviation competitive, accountable, and passenger-centric in the years to come.
